Today, Meta announced an update to protect young people from harm and said that it is working to promote safe, age-appropriate experiences for teens on Facebook and Instagram.

Meta also announced new educational programmes and tools under development to stop the spread of teens’ intimate images online. Earlier this year, Instagram announced new features to protect users from abuse.

Updates to Limiting Unwanted Interactions

Last year, the company shared some of the steps it takes to protect teens from suspicious adults. It restricts adults from messaging teens they aren’t connected to and from seeing teens in their “People You May Know” recommendations.

Meta also says it is testing ways to stop teens from messaging suspicious adults they aren’t connected to, and it won’t show those adults in teens’ People You May Know recommendations.

A “suspicious” account is one belonging to an adult who may have recently been blocked or reported by a young person. Meta is also testing removing the message button from teens’ Instagram accounts when those accounts are viewed by suspicious adults.

Encouraging Teens to Use Our Safety Tools

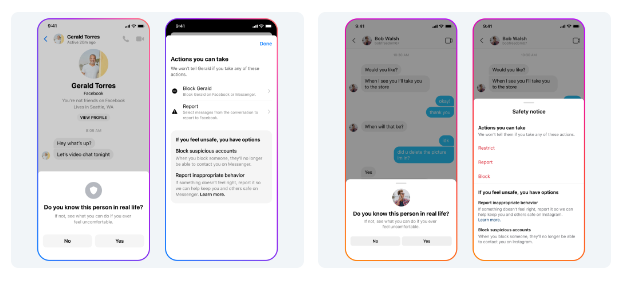

Meta has built tools that let teens report when something makes them uncomfortable in its apps, and it has introduced new notifications encouraging teens to use them. Teens will be prompted to report accounts after they block them, and they will receive safety notices with tips on handling inappropriate messages from adults.

In 2021, more than 100 million people saw safety notices on Messenger. Meta also made its reporting tools easier to find, which it says led to a 70% increase in reports from minors on Messenger and Instagram DMs in Q1 2022.

New Privacy Defaults for Teens on Facebook

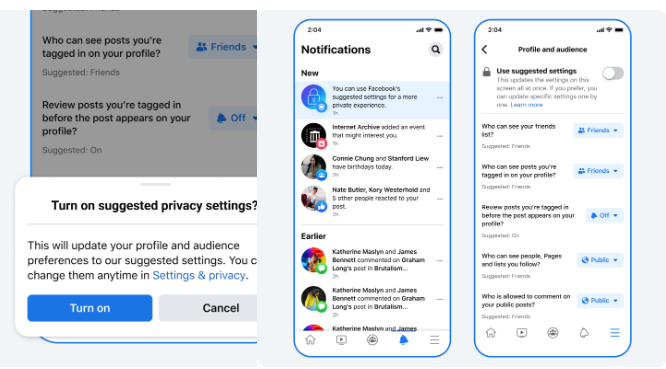

Everyone under 16 (or under 18 in some countries) will be placed into more private settings by default when they join Facebook, and the company will encourage teens already on the app to choose these more private options for:

- Who can see their friends list

- Who can see the people, Pages and lists they follow

- Who can see posts they’re tagged in on their profile

- Reviewing posts they’re tagged in before the post appears on their profile

- Who is allowed to comment on their public posts

Meta says this decision follows similar privacy defaults for minors on Instagram and aligns with safety-by-design and “Best Interests of the Child” frameworks.

New Tools to Stop the Spread of Teens’ Intimate Images

Meta also shared an update on its work to stop the spread of teens’ intimate images online, particularly when those images are used to exploit them (a practice known as “sextortion”). Having intimate images shared without consent can be traumatic, and Meta says it wants to discourage teens from sharing such images in the first place.

- Meta is collaborating with the National Center for Missing and Exploited Children (NCMEC) to build a global platform for teens who are worried that intimate images they created may be shared online without their consent.

- The platform will be similar to Meta’s existing work to prevent the non-consensual sharing of adults’ intimate images. It will help prevent teens’ intimate images from being posted online, and other companies across the tech industry will also be able to use it.

- Meta says it worked closely with NCMEC, experts, academics, parents, and victim advocates to design the platform, so that teens can regain control of their content in these distressing situations.

Additionally, Meta is working with Thorn and its NoFiltr brand to develop educational materials that reduce the shame and stigma surrounding intimate images and encourage teens to seek help and take back control if they have shared such images or are experiencing sextortion.

New PSA Campaign

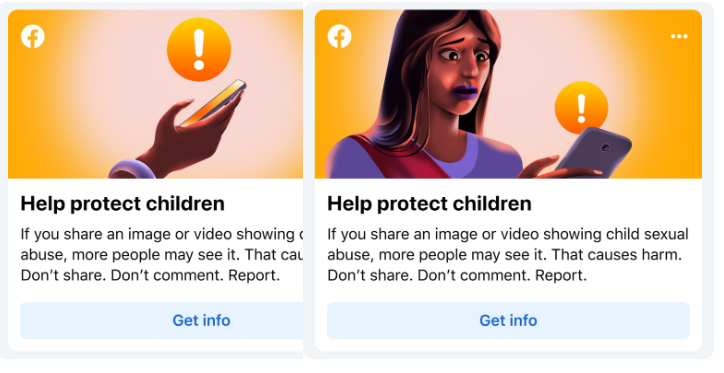

Meta found that more than 75% of the people it reported to NCMEC for sharing child exploitative content did so out of outrage, poor humor, or disgust, with no apparent intention to cause harm. Regardless of intent, sharing this content violates Meta’s policies.

- Meta is also planning a public service announcement (PSA) campaign that encourages people to stop resharing these images online and to report them instead.

- Meta points to its education and awareness resources, including the Stop Sextortion site in Facebook’s Safety Center, built with Thorn.

- The Education Hub in Meta’s Family Center offers guidance for parents on how to talk to teens about intimate images.

Announcing the updates in a blog post, Meta wrote:

Today, we’re sharing an update on how we protect young people from harm and seek to create safe, age-appropriate experiences for teens on Facebook and Instagram.

In addition to our existing measures, we’re now testing ways to protect teens from messaging suspicious adults they aren’t connected to, and we won’t show them in teens’ People You May Know recommendations.