47% of Indians Victims of AI-Powered Voice Scams: Report

A report by McAfee, The Artificial Imposter, reveals how AI technology can clone a voice from just three seconds of audio. The report warns of online voice scams and confirms McAfee’s forecasts about AI and crypto scams in 2023.

McAfee study reveals the dangers of AI voice cloning scams

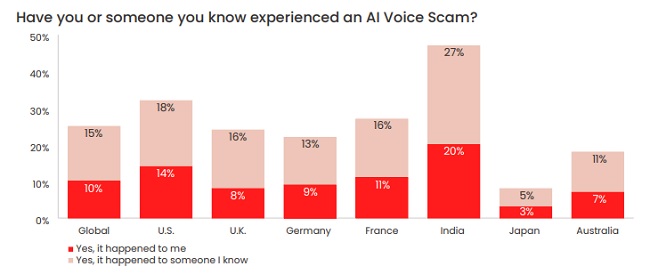

McAfee recently conducted a survey of 7,054 people across seven countries and found that 25% of adults have experienced AI voice scams, with 77% of those victims losing money. The study also revealed that scammers are using AI voice-cloning technology to send fake voicemails or calls to victims’ contacts, pretending to be in distress.

Responses and the cost of falling for AI voice scams

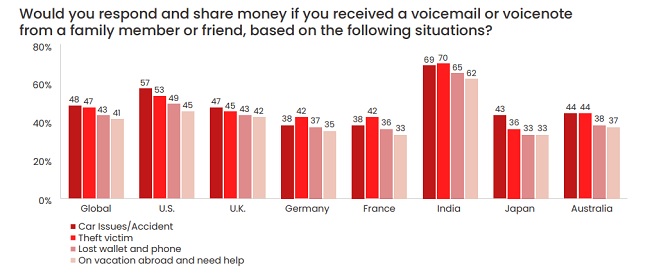

Additionally, nearly half of respondents said they would respond to a voicemail or voice note claiming to be from a loved one in need of money. The cost of falling for these scams can be high, with over a third of those who lost money reporting losses of over $1,000.

The rise of deepfakes and disinformation has also led to increased mistrust of social media, with 32% of adults saying they’re now less trusting than before.

India Most Affected by AI-Powered Voice Scams

MSI Research conducted the McAfee Artificial Imposter survey using an online questionnaire from April 13 to April 19, 2023. The survey sampled 7,054 adults aged 18 and over from seven countries, including 1,010 respondents from India.

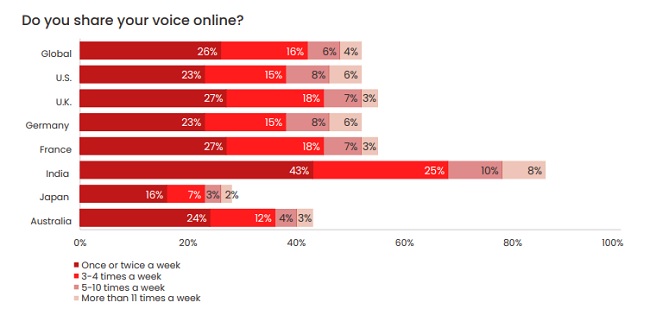

The survey found that sharing one’s voice online is most common in India, with 86% of people doing so at least once a week. Among Indian respondents, 47% reported either being a victim themselves (20%) or knowing someone who had been (27%).

Various reports suggest that India is the country most affected by AI-powered voice scams, with 83% of victims losing money to cybercriminals who use AI to manipulate the voices of friends and family members.

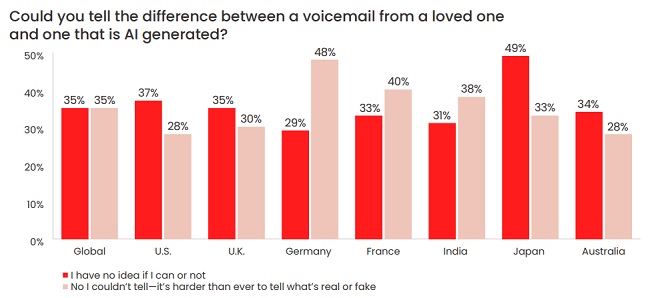

These scammers often prey on the trust and emotions of targets by pretending to be in distress and asking for urgent financial help. The inability of many Indians to differentiate between a genuine human voice and an AI-generated voice makes them particularly vulnerable to such frauds.

McAfee Labs Research Finds Voice Cloning is Easier Than You Think

Voice cloning, a technique that uses artificial intelligence to mimic someone’s voice, has become more accessible and easier to use, according to a recent study conducted by McAfee researchers. They found over a dozen freely available voice-cloning tools online, with some requiring only a basic level of expertise to use.

With just three seconds of audio, one tool produced an 85% voice match, and by training the data models, a 95% voice match was achieved. Cybercriminals could use voice cloning to exploit emotional vulnerabilities, particularly those in close relationships, and scam people out of thousands of dollars in a matter of hours.

Although AI voice cloning tools can easily replicate accents from around the world, distinctive voices with unique pace, rhythm, or style are harder to copy. However, the ease of access to these tools means that the barrier to entry for cybercriminals has never been lower.

To protect yourself from AI voice cloning, McAfee recommends:

- Agree on a verbal ‘codeword’ with trusted friends and family, and ask for it whenever they call or text asking for help.

- Always question the source of calls, texts, or emails, especially from unknown senders.

- Be careful about who you share personal information with online.

- Use identity monitoring services to ensure your personal information is not accessible or being used maliciously.

Check out the complete survey data, including country-specific results, here.

Speaking on the report, Steve Grobman, McAfee CTO, said:

AI technology presents immense possibilities, but unfortunately, it can also be used for malicious purposes in the wrong hands. Cybercriminals are taking advantage of the accessibility and simplicity of AI voice cloning tools to deceive people into sending money or personal information. It’s crucial to remain alert and take necessary precautions to safeguard yourself and your loved ones.

To confirm the authenticity of a call from a family member or spouse asking for financial help, use a pre-determined codeword or ask a question only they would know. Additionally, identity and privacy protection services can help restrict personal information that can be utilized by cybercriminals to create a convincing voice clone.